Seamless Interaction: Dyadic Audiovisual Motion Modeling and Large-Scale Dataset

Technical Report

I am an AI Research Scientist at Meta. I obtained my PhD in AI at HKUST in 2025, advised by Prof. Qifeng Chen. Before that, I received my Bachelor's degree with highest honors from the Computer Science program at Hongyi Honor College, Wuhan University (WHU), China.

I was fortunate to complete two Research Scientist internships at Meta, in 2024 and 2025, focusing on Social AI Agents and Multimodal Large Language Models (MLLMs). In Fall 2023, I visited Prof. Xiaolong Wang's group at UCSD. Before that, I was a Research Intern at the International Digital Economy Academy (IDEA), mentored by Dr. Yu Li, where I worked on digital humans and motion generation. Before joining HKUST, I had the opportunity to work with Prof. Qi Dou, Prof. Junsong Yuan, and Prof. Tomizuka.

My recent research interests include:

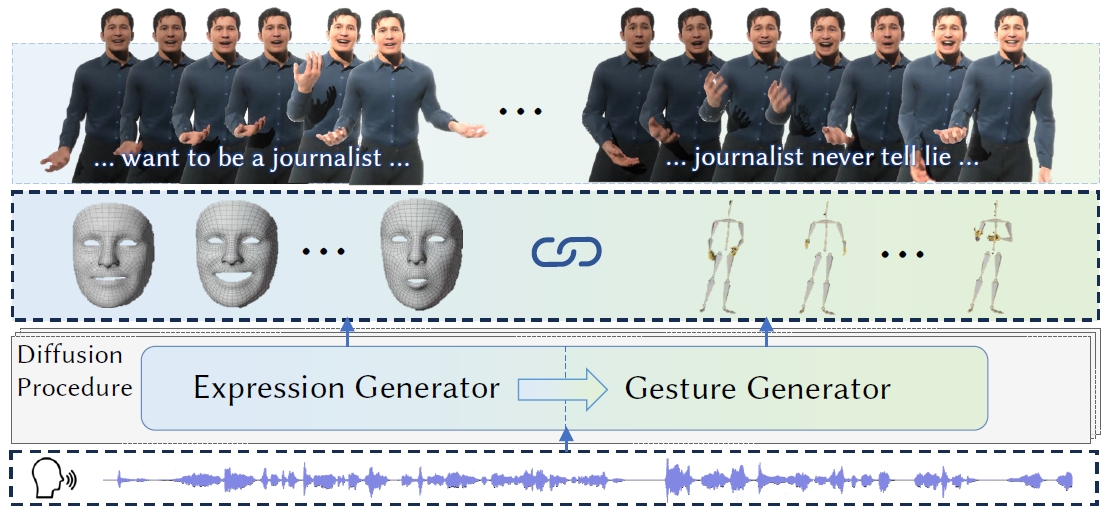

Developing novel generative AI models for multimodal content creation, including audio, video, and 3D generation.

Building and advancing Multimodal Large Language Models that can understand and generate across multiple modalities.

Creating AI agents capable of natural social interactions, including conversational dynamics and human-like behaviors.

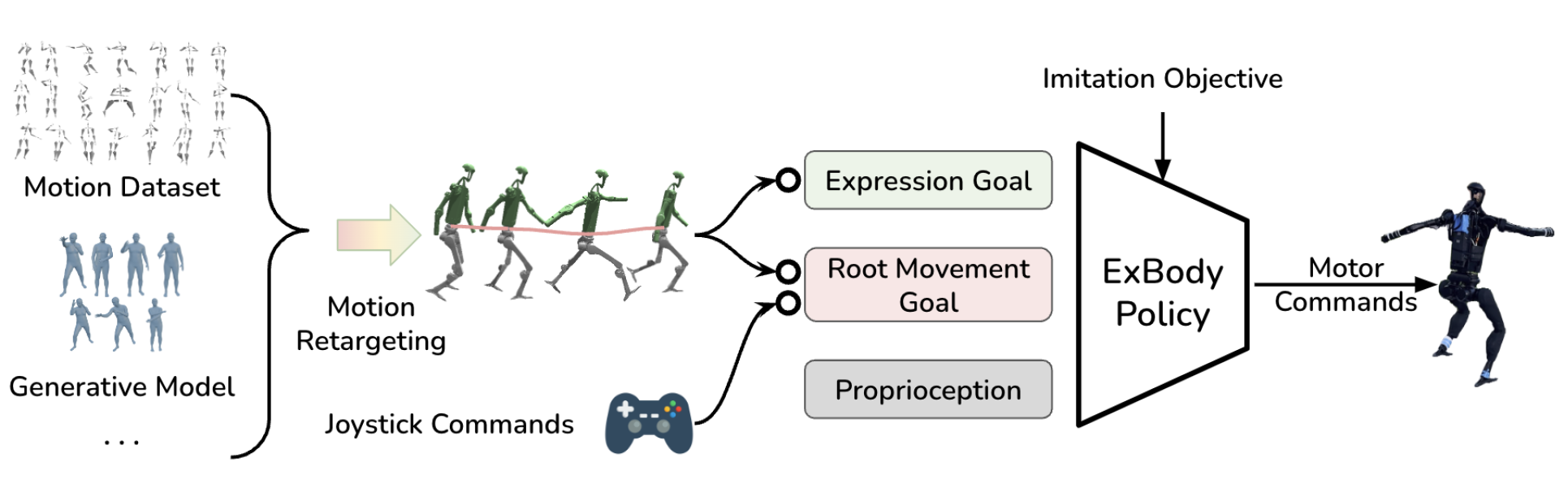

Developing realistic digital humans with expressive gesture and motion generation, as well as embodied AI systems.

For a complete list of publications, please visit my Google Scholar profile.

Technical Report

CVPR 2024 (Acceptance rate: 23.6%)

WACV 2023 (First round acceptance rate: 22.23%)

ACM Multimedia 2022 (Acceptance rate: 27.9%)

Medical Image Analysis 2021

Fall 2022, HKUST

Deep Learning in Computer Vision

Spring 2022, HKUST

Advanced Deep Learning Architectures

CVPR, SIGGRAPH, AAAI, ACM Multimedia, and WACV

I have been playing the violin since the age of 8, and I also love singing.

I love table tennis and played on the CS department's table tennis team at WHU.